Ask any content marketer to define "high quality" and you'll get the same answer: well-researched, expertly written, professionally designed, thoroughly edited. But if you look at what actually performs across different channels and formats, that definition doesn’t always hold.

When a new medium or channel emerges, our gut instinct is to apply the quality standards of what came before. But it rarely works. Early TV producers tried to apply radio broadcasting standards to television. The first websites were essentially just digital brochures.

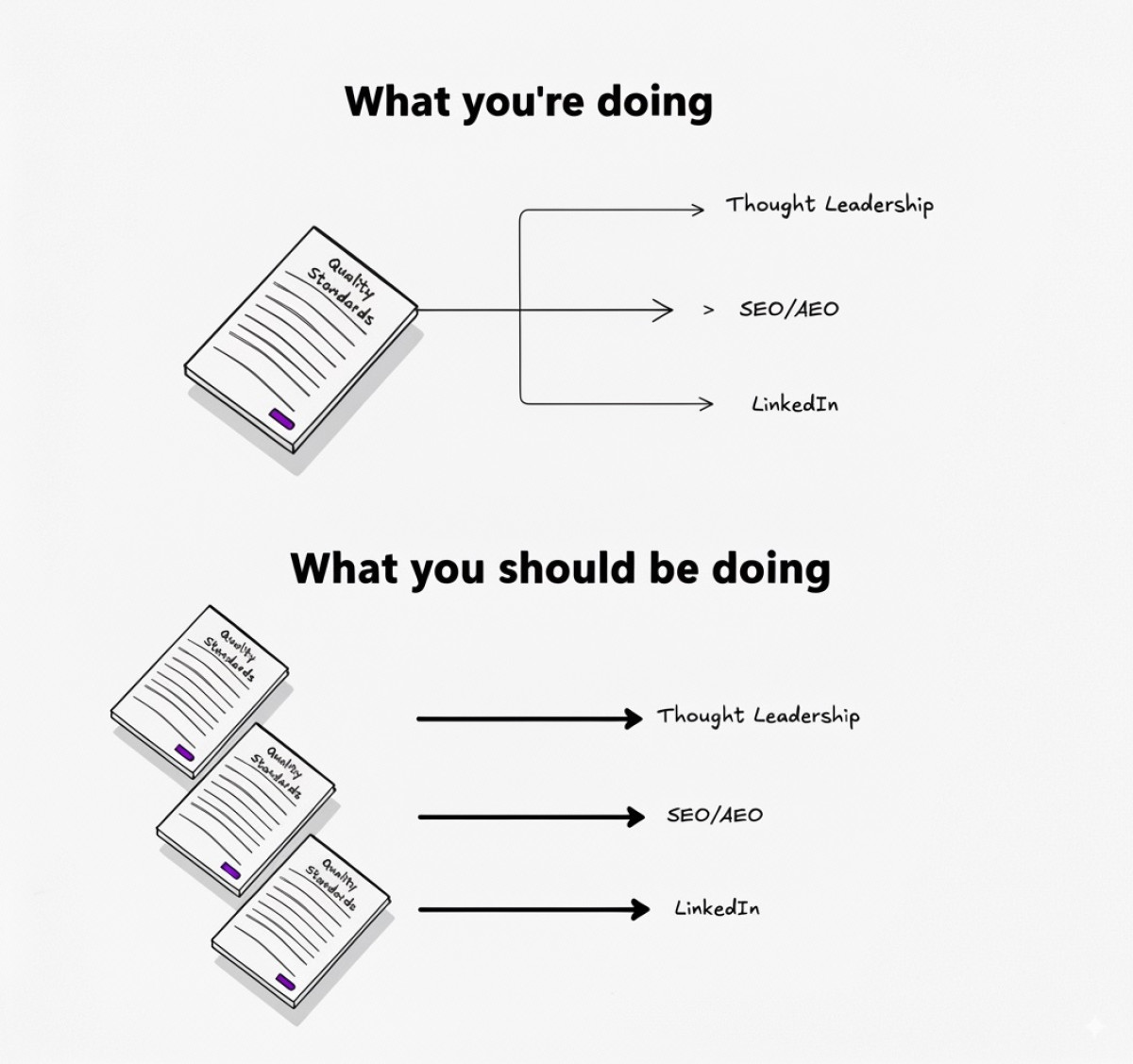

Each medium, channel, and format evolves its own definition of quality. Often such rules are unspoken, and what's particularly confusing about our profession is that they’ve persisted across many channels and formats for a long time. The fundamentals of good writing looked similar across print, books, and blogs for decades.

Yet now, suddenly, it seems, the rules are even more unspoken and confusing than before. All lowercase is authentic on X and for tech bosses. Some obvious AI-generated text patterns are accepted on LinkedIn if the idea resonates. And who knows what AEO algorithms prefer?

We need to rethink how we define "high quality" at every turn.

The same content can be high or low quality depending on the situation. Our job is to identify what quality actually means for each channel, format, and context, and we have to revisit such definitions much more often than before.

One Channel's Quality Is Another's Miss

Let me explain with a brief example: TikTok and Addison Rae.

For anyone over the age of 35, Addison Rae is a TikTok dancer-turned-singer who rose to fame in 2019. Videos of her doing short dances garnered her nearly 60 million followers by 2020.

At the peak of her fame, she went on Jimmy Fallon to do a medley of trending TikTok dances. It bombed. It was awkward to watch and, I imagine, perform. The audience’s cheers were strained. Rae’s smile only seemed to get more forced and more pained as the segment went on for an excruciating 2 minutes and 12 seconds.

Now, this segment had all the hallmarks of what TV people consider high quality. It was shot on studio cameras, on a soundstage, with the hottest TikTok star of her time, who, as far as I could tell, didn’t miss a single move. The music was played live by The Roots, for heaven’s sake. It checked all the boxes. And still, watching it made you feel… weird. (It has since been scrubbed from both Fallon’s and Rae’s social accounts, but can still be found.)

The reason: it was “high quality” for the wrong context. TikTok dances are shot on an iPhone (9:16 aspect ratio compared to TV’s 16:9), near the subject, wide angle, often in a bedroom or kitchen, and, at the time, lasted 60 seconds max. At its best, a TikTok is a little sloppy. And that’s the point. That’s the context for that channel. That’s what’s quality on that channel.

This same concept applies to B2B SaaS content.

Quality = Purpose + Context

The challenge, then, is identifying when and where to apply which standards. The way we think of it is: Quality is the intersection of purpose and context.

Purpose: What specific outcome does this content need to achieve? SEO content needs to capture traffic and convert. Whitepapers need to build credibility with enterprise buyers. LinkedIn posts need to drive engagement and position expertise. Different purposes require completely different quality criteria.

Context: Who's consuming this, where, and what do they expect? Search users want quick answers. C-suite executives expect authority signals. LinkedIn audiences value authenticity over polish. Context determines which quality markers actually matter.

When you nail both purpose and context, you can ignore traditional quality rules that don't serve your specific situation. Miss either one, and even "high quality" content fails — or at best wastes time and resources.

This explains why that one-size-fits-all quality checklist your team uses doesn't work. Why some of your most polished content gets ignored while rougher pieces drive results. Why copying what works for other companies can backfire.

Where the Same "Quality" Rules Create Different Results

Here are three examples of how different contexts demand different quality approaches:

High-Volume AEO/SEO Content

Most content people look at high-volume AEO/SEO content and see everything wrong with modern marketing. (I've been one of them.) Minimal research. Basic writing. No original insights. Cookie-cutter structure. If you apply traditional quality standards, this content often fails the test.

But this content has its own quality standards. Its first audience is AI or search engines, so the goal is a well-crafted strategy that targets search intent. If it answers the user’s query, it's doing exactly what it should. It doesn’t need to meet some high level of journalistic excellence because that's secondary in this context.

A mass of SEO posts can even give these engines a signal boost. You can then use traffic data from these low-effort posts to determine which ones to refresh into more traditionally high-quality pieces.

For example, we're working with a customer in the industrial operations space to produce over 20 articles per month that primarily target answer engines like ChatGPT, Perplexity, and Google’s AI Overviews.

The writing is solid, the style is on-brand, and the pieces contain new insights. But these articles would never meet our traditional quality standards. And for AEO, that’s OK!

Here are other aspects of quality in an AEO context:

You need to refresh more content more often compared to traditional SEO.

You need to answer a much higher number of queries and their variations.

You need to avoid AI-generated slop and apply your voice without straying into highfalutin thought leadership territory.

More than anything, quality in AEO content comes from the craft and thought you put into orchestrating the entire program, rather than into the individual pieces.

Survey-Driven Whitepapers

Now flip to whitepapers. All those quality markers that high-volume SEO content can skip? They define the medium. You can’t have a good survey-driven whitepaper without original research, good writing, and professional design.

The purpose and the context both demand credibility markers. Enterprise buyers are using whitepapers to build internal business cases and justify significant budget decisions. They're sharing them with procurement teams, forwarding them to colleagues, and using them in board presentations. If your whitepaper looks hastily produced or lacks credible data, it undermines their (and your) professional reputation when they reference it internally.

We created a whitepaper for a fintech client that took several months to produce. It involved original surveys of fraud prevention experts, custom interviews, and professional design and charts. It generated millions in pipeline from enterprise prospects who downloaded it and shared it across their organizations. The quality bar was high and deserved the amount of time and resources we spent.

LinkedIn Thought Leadership

And when it comes to LinkedIn thought leadership, an overproduced piece of content doesn’t perform any better than a simple one. In fact, most C-suite executives and founders we talk to say just posting regularly improves performance over time.

And that makes sense. The purpose is to educate, entertain, or distract (sometimes all three). The context is that it’s seen for a few precious seconds, if at all. And because of that, it’s largely inconsequential if a post goes out with a typo or missing punctuation. You don’t even need to use traditional capitalization if you don’t want to. Ironically, skipping it may even signal authority, confidence, and a human at the keyboard.

LinkedIn rewards personality and timeliness over polish. It rewards posting consistently. A hastily written hot take about an industry development is more likely to outperform a carefully crafted evergreen post. At best, a post you spent hours worrying about will perform the same as the post you wrote in 10 minutes between meetings.

Think Twice. It’s Alright.

This might all sound like I’m saying you should lower your standards. But that’s not the case. I'm saying you should pause, determine which standard you should work to, and then decide where to invest your time and how much to give.

Knowing how to make that judgment is the skill that separates successful content programs from busywork. The question is not "Is this content objectively good?" It's "Is it good for its intended job?" Anything more risks draining resources.

When you treat quality as a strategic choice, not a universal standard, you'll know when “good enough” is the smarter play. That’s what delivers real returns.
