Using just one AI model is like having a team of one.

You wouldn't hire a single person to be your developer, designer, writer, and analyst. So why expect one AI model to excel at everything?

Instead, spend time with different models. Learn which one has the brains for your task and the personality to fit your style.

Why Not Simply Stick to One Model?

"ChatGPT works just fine!" you might think; why make life unnecessarily complicated?

Chances are you've already run into problems in your AI usage: hallucinations, meh answers, failed schemes. When you only use one model, you might ascribe such issues to the technology in general ("See, AI sucks, it's all hype").

In reality — especially in 2025 — there's almost certainly a model that can do what you want if you knew which one to pick for your task.

Different AI models can provide drastically different results. So if you think everything is working fine with just one model, you're missing out.

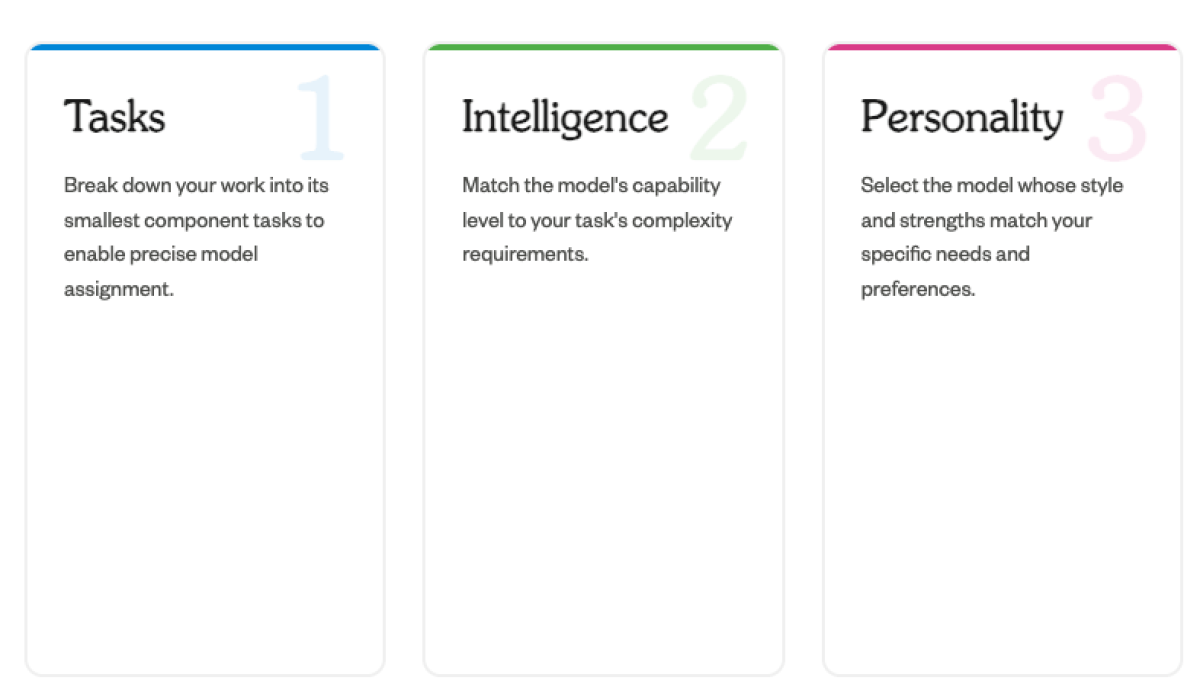

The TIP Method: Tasks → Intelligence → Personality

To understand which model to pick for a task, I recommend a three-step method:

Break down work into its component tasks, otherwise you can't assign the appropriate work to the most suitable model.

Shortlist models on intelligence needed based on the task's complexity.

Pick the final model based on personality.

Follow these steps, and you'll end up with the best model for your work.

Break Down Your Work Into Smaller Tasks

The first step is breaking down tasks into their smallest components. "Research, outline, draft, review" instead of "write article." "Analyze meeting transcript, determine follow-up actions, write summary for attendees" instead of "write meeting summary."

Some models are better at analysis, while others are better at writing. Some are great for coding, others for design. If you don't break down your work into smaller tasks, you can't assign the right specialist model to the appropriate task.

As a rule of thumb, the bigger and more important the work, project, or task, the more time to spend breaking it down into smaller tasks.

Determine How Much Intelligence You Need

After breaking down your tasks, intelligence is the next factor to consider when choosing a model. Unless you're highly focused on costs or speed, intelligence trumps all other factors, including personality.

What makes a model more intelligent or capable? Generally speaking, more capable models are better at understanding intent, fact-checking, handling more information, and delivering higher-quality outputs. They can maintain coherence across longer contexts, catch subtleties you didn't explicitly state, and produce work that needs less editing.

Box CEO Aaron Levie demonstrated this by testing earnings transcripts: GPT-5 caught subtle logical inconsistencies that "GPT-4.1 and other models that were state of the art just ~6 months ago" completely missed, showing how newer models excel at tasks requiring sophisticated reasoning.

Default to Higher Intelligence

In my — admittedly — anecdotal experience, roughly 80% of my everyday work tasks benefit from more intelligence, so I default to higher intelligence. It improves output and makes model selection easier.

For example, when OpenAI's o3 was out, and Claude's Sonnet and Opus models were at versions 3.7, I used o3 for 75%+ of my work, because I got better results from it. (Even though its obsession with tables got on my nerves quickly, I put up with it).

Then Claude's newer Opus and Sonnet models came out, and I shifted more of my work to Claude. With the intelligence gap reduced, I wasn't willing to tolerate o3's table-filled, dry answers anymore.

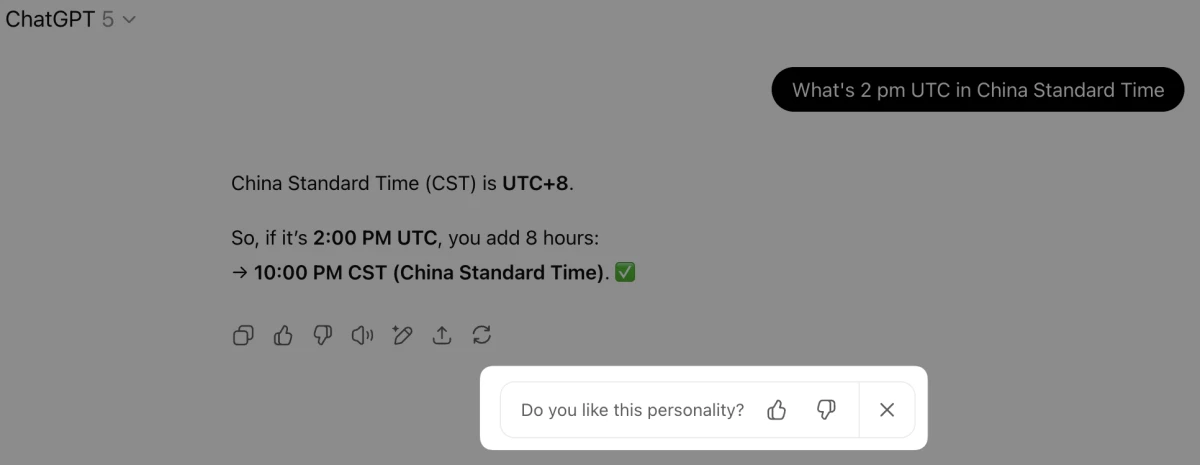

Pick the Personality That Matches Your Task

Intelligence being roughly equal, model choice comes down to personality. AI models have distinct personalities — a mix of style, strengths, weaknesses, and quirks.

As I wrote in my original review of ChatGPT Pro:

“According to Wharton professor Ethan Mollick, GPT-4 behaves like a 'focused, analytical workhorse that wants to get stuff done for you.' That description perfectly matches what we found testing ChatGPT Pro (o1 Pro). Think of o1 Pro as the nerdy 'PC guy' from those classic Apple commercials – all business, deeply focused, and ready to crunch through complex tasks. Claude, in contrast, is more like the creative 'Mac guy' – warm, adaptable, and excellent at grasping nuance.”

These different traits appear mostly because of how the models are trained: which data the AI labs use, the feedback they give about desired and undesired responses, and so on.

Some of the model characteristics are measurable, just like people can have hard skills ("good at coding," "bad at writing"). Others are more soft and subjective ("I don't like how Claude responds," "GPT-5 seems more creative").

Just as you might prefer working with John while someone else prefers Judy, people also click differently with AI models.

For example, at Animalz, we run our email newsletter copy through parallel workflows: one using GPT-4o, another using Claude Sonnet. I consistently prefer Claude's version (more professional, measured tone), while a coworker prefers GPT-4o's casual, emoji-friendly style.

Same input, completely different outputs, with neither objectively better or worse — a matter of pure personal preference.

Picking the right personality for a task therefore breaks down into two steps, and in this order:

Understand the hard skills required for the task, and match those to the model known to have the best capabilities for said task.

Hard skills being roughly equal, pick the model with the soft aspects you like or need.

Here's an example of how this works: in my experience, ChatGPT's Pro models are more precise than Claude in picking up all details from a large number of documents. So if I want an exhaustive analysis of multiple meeting or interview transcripts, I'd pick ChatGPT Pro — a case of hard trumps soft.

When it comes to making a pleasant-sounding, nuanced summary of a single meeting, I prefer Claude over ChatGPT's Pro models; they sound more buttoned up and analytical compared to Claude. Hard requirements being equal, soft wins.

The more time you spend with different models, the more intuitive your choices become. But beware confusing the nature of hard skills. For example, making a good outline isn't a creative task but an analytical one. Writing the headlines within those outlines once it's established is a creative task.

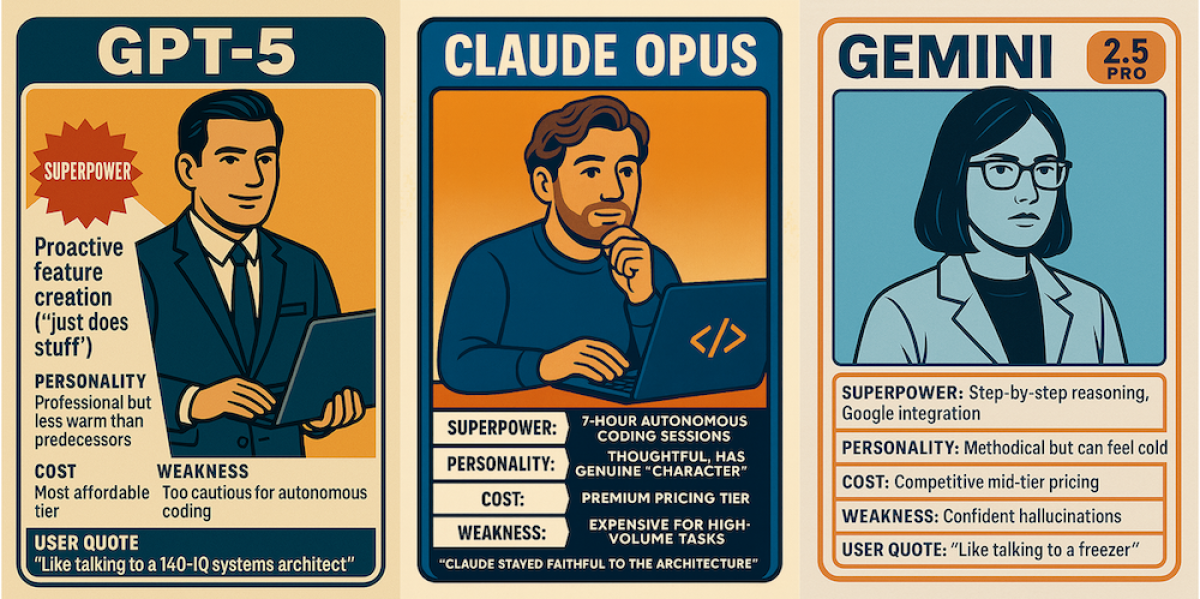

A Snapshot of Model Personalities

If you want to distribute guidance around model usage through your team or organization, you can track model behavior with reference sheets or baseball style cards that capture each model's personality.

Here are some examples to get you started:

GPT-5

Superpower: Proactive feature creation ("just does stuff")

Personality: Professional but less warm than predecessors

Cost: Most affordable tier

Weakness: Too cautious for autonomous coding

User quote: "Like talking to a 140-IQ systems architect"

Claude Opus

Superpower: 7-hour autonomous coding sessions

Personality: Thoughtful, has genuine "character"

Cost: Premium pricing tier

Weakness: Expensive for high-volume tasks

User quote: "Claude stayed faithful to the architecture"

Gemini 2.5 Pro

Superpower: Step-by-step reasoning, Google integration

Personality: Methodical but can feel cold

Cost: Competitive mid-tier pricing

Weakness: Confident hallucinations

User quote: "Like talking to a freezer"

The fascinating thing about modern AI interactions is how deeply personal the experience becomes. As Alex Duffy from Every put it about losing access to GPT-4.5: "I'm kind of mad... I felt like I had a feel for it." Users develop genuine emotional attachments to specific models, and these subjective preferences matter as much as any benchmark.

Pit Models Against One Another

Not every model choice will be clear cut (even with the TIP method). Sometimes you just want a second opinion, or the best of both worlds.

Running the same prompt and context through more than one model is only a few extra mouse clicks of work. You can even let them criticize and synthesize each other's output.

Two simple multi-model patterns I use often that will get you superior results:

Parallel test: Run identical prompts across two models and pick your favorite output.

Critique loop: Have model A review the output of model B and vice versa. (You can even define formal scoring criteria for the models to use.)

If you switch between models often, some tools make such multi-model workflows easier.

You can branch conversations in multi‑model chat interfaces like TypingMind to compare answers side‑by‑side. Lex, an AI writing tool, lets you switch between all popular models even within one conversation. And more sophisticated platforms like AirOps allow you to build entire workflows where each step can use a different AI model.

Nothing Beats Mileage

The TIP method provides the foundation, but ultimately you need to spend real time with these models — just like building any working relationship.

A week with Claude will teach you more about its personality than any review could. A few sessions with GPT-5 will reveal quirks no benchmark captures.

Once you know even two or three models well, you'll instinctively match tasks to the right one. You'll have evolved from an AI user to an AI manager, working with a specialized team instead of being at the whim of a single chatbot.

Start today: For the next week, use at least two different models. Apply the TIP method to one real project. You'll never be AI model monogamous again.

Frequently Asked Questions

If AI agents can break down tasks automatically, do we still need manual model selection?

If you're familiar with AI agents (systems that can break down objectives into tasks and execute them autonomously), you might wonder if breaking down tasks is still necessary. After all, agents can take a goal, break it down into tasks, and use tools or queries to execute them.

The problem is that agents use only one model. Watch reasoning models in action, and you'll see they execute a list of tasks. But it's still one model doing everything, so you miss the benefit of tapping into different models' strengths and weaknesses.

Won't GPT-5's router eliminate the need for manual model selection?

GPT-5 introduced a router that automatically selects between different internal models based on task complexity. While this is a step forward for casual users, it doesn't eliminate the need for deliberate model selection, especially for professional work.

The router optimizes for general performance and cost, not your specific needs. It can't know that you prefer Claude's writing style for marketing copy, or that Gemini's structured output works better for your data analysis workflow. More importantly, you're still limited to OpenAI's models.

Routers will get smarter, but multiple frontier and specialist models will continue to exist. Knowing their differences remains crucial for professional workflows.

How do I actually know which model is more intelligent?

There are formal benchmarks, but for most everyday work, you can follow these practical rules:

Newer generation models are typically more powerful (e.g., GPT-5 vs. GPT-4, Claude Opus vs. Claude 3.5)

Each generation usually comes in varying strengths (e.g., GPT-4 vs. GPT-4 Turbo, Claude Opus vs. Claude Sonnet)

Reasoning models are more capable than non-reasoning models

When in doubt, test the same complex task across models and compare results

The best measure? Your actual work. Run your most challenging task through different models and see which one requires less editing, catches more edge cases, or produces more usable output.